EXPLORING SQUIRREL HILL

Teammates: Alex Tsai, Sharon Yu

Role & Contributions: Storyboarding, wireframing, graphics, motion

Carnegie Mellon University | Winter 2016

Challenge

Create a wayfinding device that uses speech recognition as the sole form of input.

Outcome

A kiosk that recommends day trips in Squirrel Hill, Pittsburgh, based on a visitor's party size, interests, and available time, helping them discover what Squirrel Hill has to offer.

BACKGROUND

Squirrel Hill is a residential neighborhood in Pittsburgh, PA, that doubles as a one-stop shop for entertainment, food, and shopping. It offers a diverse range of experiences, with kosher brunch and soup dumplings on the same street. The busiest area of Squirrel Hill centers on Forbes and Murray Avenues. College students and other visitors tend to frequent the same popular restaurants and shops in that area, so we wanted our wayfinding device to suggest the neighborhood's lesser-known "hidden gems."

PROCESS

User flow storyboard:

Information Structure

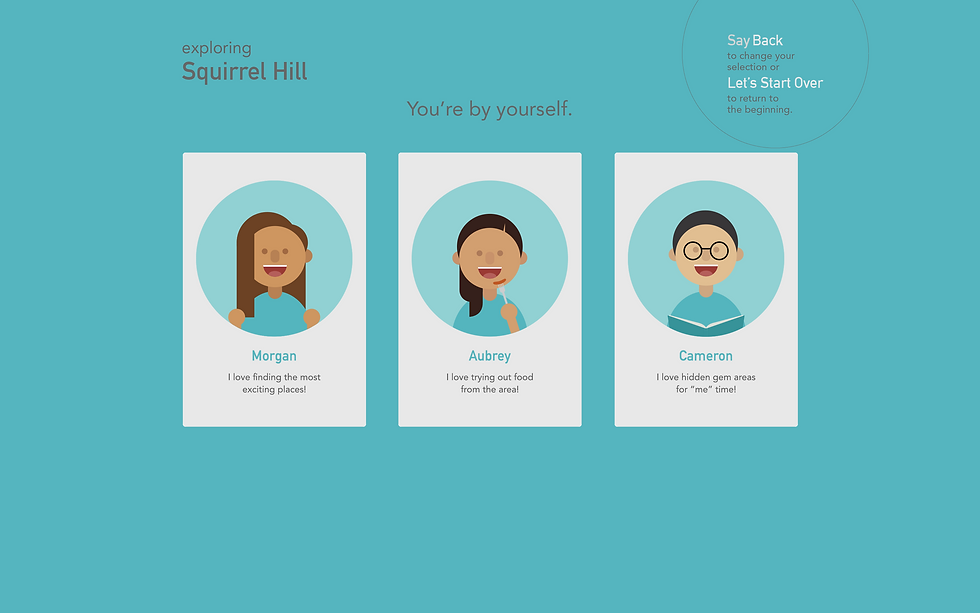

To recommend less-traveled places, we combed through Yelp to compile a list of possible destinations. First, we constructed personas, which we loosely defined as people who like food, people who like excitement and new experiences, and people who enjoy quieter or intellectual activities. We chose these personas based on the kinds of activities available in Squirrel Hill.

Next, we identified the type of trip the visitor is taking: a solo adventure, hanging out with friends, going on a date, or spending time with family. We drew up a list of activities for each type of group or individual.

Finally, based on the amount of time the visitor wants to spend in the neighborhood, the kiosk recommends some or all of the items on the matching list.
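The three layers above (persona, trip type, available time) amount to a simple lookup followed by a cut. The sketch below is a minimal illustration under stated assumptions, not the project's code: the activity entries are placeholders rather than the places we picked from Yelp, each list is assumed to be ordered from most to least recommended, and the one-hour-per-stop rule (`HOURS_PER_STOP`) and the `recommend` function are hypothetical.

```javascript
// Hypothetical activity lists keyed by trip type, then by persona.
// Entries are placeholders, not the actual Squirrel Hill picks;
// each list is assumed to be ordered from most to least recommended.
const ACTIVITIES = {
  solo: {
    food: ["solo food 1", "solo food 2", "solo food 3"],
    excitement: ["solo excitement 1", "solo excitement 2", "solo excitement 3"],
    quiet: ["solo quiet 1", "solo quiet 2", "solo quiet 3"],
  },
  friends: {
    food: ["friends food 1", "friends food 2", "friends food 3"],
    excitement: ["friends excitement 1", "friends excitement 2", "friends excitement 3"],
    quiet: ["friends quiet 1", "friends quiet 2", "friends quiet 3"],
  },
  date: {
    food: ["date food 1", "date food 2", "date food 3"],
    excitement: ["date excitement 1", "date excitement 2", "date excitement 3"],
    quiet: ["date quiet 1", "date quiet 2", "date quiet 3"],
  },
  family: {
    food: ["family food 1", "family food 2", "family food 3"],
    excitement: ["family excitement 1", "family excitement 2", "family excitement 3"],
    quiet: ["family quiet 1", "family quiet 2", "family quiet 3"],
  },
};

// Assumption: each stop takes roughly one hour of the visitor's time.
const HOURS_PER_STOP = 1;

// Return some or all of the matching list, depending on available time.
function recommend(tripType, persona, hoursAvailable) {
  const list = ACTIVITIES[tripType]?.[persona];
  if (!list) return []; // unknown trip type or persona
  const stops = Math.max(1, Math.floor(hoursAvailable / HOURS_PER_STOP));
  return list.slice(0, stops); // slice never runs past the end of the list
}
```

For example, `recommend("date", "food", 2.5)` returns `["date food 1", "date food 2"]`, while a visitor with a whole afternoon gets the full list. Keeping the data as a nested lookup means adding a new persona or trip type is a data change rather than a logic change.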

Creating Content

We used p5.js to code the speech recognition functions and animate interactions. We chose to host the product on a kiosk in Squirrel Hill, since an on-site kiosk would be highly accessible and offer a larger screen. We did not develop a mobile interface because we felt that downloading an app just to navigate a day trip was impractical. Because getting a route takes only a few steps, we also expected that lines and crowds around the kiosk would not be an issue.

Because human speech is free-form and leaves plenty of room for recognition errors, we were careful to phrase each question so it could be answered in a single word. The interface supplements the questions with playful graphics and with visual feedback that acknowledges the user's input. Each step is simple and gives clear instructions. After the user confirms a satisfactory route, the kiosk displays a QR code that opens Google Maps on the user's phone with directions to these places.
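One way to produce the link behind such a QR code is Google's public Maps URLs format for directions (`https://www.google.com/maps/dir/?api=1&...`). This is a hedged sketch, not necessarily how our kiosk built its link: the `mapsDirectionsUrl` function is hypothetical, it assumes the last stop is the destination and earlier stops are waypoints, and it leaves out `origin` so Maps can start from the phone's own location where available.

```javascript
// Build a Google Maps directions link (Maps URLs, api=1) for an ordered list of stops.
// Assumption: the last stop is the destination and earlier stops are waypoints.
// No origin is set, so Maps can fall back to the phone's current location.
function mapsDirectionsUrl(stops, travelMode = "walking") {
  if (stops.length === 0) throw new Error("need at least one stop");
  const params = new URLSearchParams({
    api: "1",
    destination: stops[stops.length - 1],
    travelmode: travelMode,
  });
  const waypoints = stops.slice(0, -1);
  // Waypoints are pipe-separated; URLSearchParams percent-encodes the pipe.
  if (waypoints.length > 0) params.set("waypoints", waypoints.join("|"));
  return `https://www.google.com/maps/dir/?${params.toString()}`;
}
```

The returned string is what a QR code generator would encode; the sketch leaves that step to whatever QR library the kiosk uses.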

I created the character designs and co-created animations for our interface.

Our demo video shows a user stepping up to a kiosk in Squirrel Hill to get an itinerary for the day. These screen captures show a walkthrough of our wayfinding product.

FINAL PRODUCT

LEARNING & IMPLICATIONS

The speech-based interface was an assigned constraint, so I got to think about interactions specific to a voice UI. For example, we realized that the chance of error with voice input was high, so we designed for one-word answers. To do this, we needed to clearly define the information we were asking for at each step.
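The one-word design can be sketched as a list of steps, each accepting a small set of expected words. This is a minimal sketch assuming the speech recognizer hands back a plain text transcript; the prompts, accepted words, and the `STEPS`, `matchAnswer`, and `collectAnswers` names are hypothetical, not the project's code. A transcript counts as an answer only if exactly one accepted word appears in it, so an ambiguous or off-topic reply simply re-asks the same step.

```javascript
// Hypothetical question steps, each with the one-word answers it accepts.
const STEPS = [
  { prompt: "Who are you exploring with?", answers: ["solo", "friends", "date", "family"] },
  { prompt: "What are you in the mood for?", answers: ["food", "excitement", "quiet"] },
  { prompt: "How many hours do you have?", answers: ["one", "two", "three", "four"] },
];

// Match a raw transcript against one step's accepted words.
// Returns the single matching word, or null so the kiosk can re-ask.
function matchAnswer(transcript, accepted) {
  const words = transcript.toLowerCase().match(/[a-z]+/g) ?? [];
  const hits = accepted.filter((word) => words.includes(word));
  return hits.length === 1 ? hits[0] : null;
}

// Walk the steps in order; a step advances only when a transcript matches.
function collectAnswers(transcripts) {
  const answers = [];
  let step = 0;
  for (const heard of transcripts) {
    if (step >= STEPS.length) break;
    const match = matchAnswer(heard, STEPS[step].answers);
    if (match !== null) {
      answers.push(match);
      step += 1;
    }
  }
  return answers;
}
```

For example, `collectAnswers(["friends", "something fun", "food please", "two"])` skips the unmatched second reply and returns `["friends", "food", "two"]`. Requiring exactly one hit is deliberately conservative: a reply like "friends and family" is rejected rather than guessed at.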

- Designing an interface for speech recognition, navigating its affordances and limitations
- Organizing information into discrete steps to provide clear directions
- Considering the environment and medium as context for a wayfinding problem
- Creating an appropriate visual language for the content
- Using p5.js (a JavaScript library) to create bouncing circles, animate GIFs, and transition screens